I occasionally use this Substack to write brief reviews of interesting books. My previous reviews include The Least of Us by Sam Quinones and The Snowball by Alice Schroeder.

Situational Awareness is the long-form blog series by Leopold Aschenbrenner that has gobbled up the lion's share of online AI chit-chat over the past few weeks. In it, Aschenbrenner delivers his perspective on what the last 4 years of AI development mean for the next 4-6 years (a timespan in which he predicts both AGI and machine superintelligence), and the implications for capital investment, security, alignment, and geopolitics.

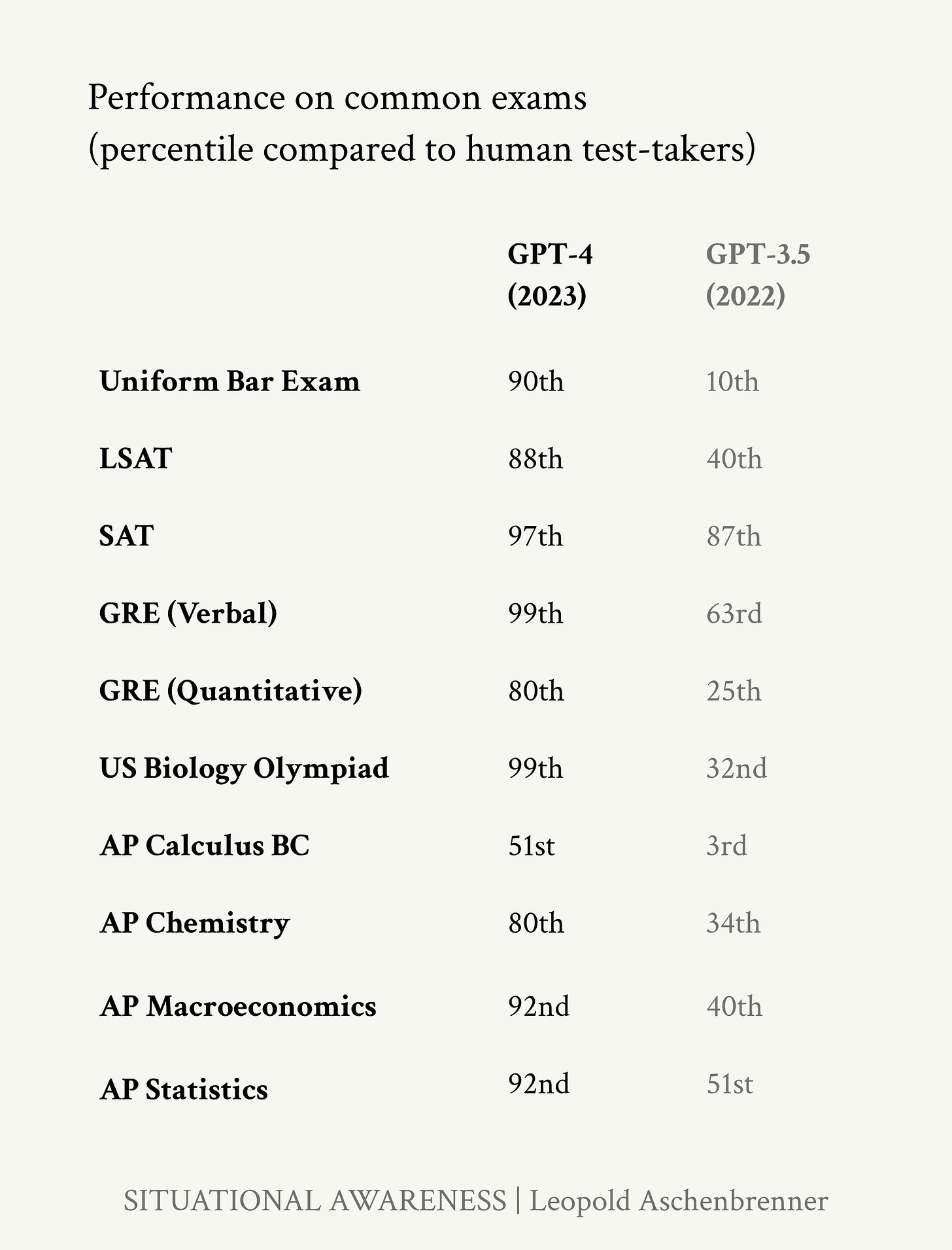

The strongest and most eye-opening portions of the series are the first two chapters (which also happen to be the longest and most fact-dense). Chapter 1, From GPT-4 to AGI, covers the last half decade of AI progress with an emphasis on just how under-appreciated and super-exponential the rate of improvement has been. In short, LLMs are (give or take) 100,000x more capable than they were in 2019, confounding the naysayers who claim that nothing much interesting is going on here.

There's also a super informative breakdown of the various vectors by which these models improve. I'll just quote him directly so you can get the idea:

> We can decompose the progress in the four years from GPT-2 to GPT-4 into three categories of scaleups:
>
> Compute: We’re using much bigger computers to train these models.
>
> Algorithmic efficiencies: There’s a continuous trend of algorithmic progress. Many of these act as “compute multipliers,” and we can put them on a unified scale of growing effective compute.
>
> “Unhobbling” gains: By default, models learn a lot of amazing raw capabilities, but they are hobbled in all sorts of dumb ways, limiting their practical value. With simple algorithmic improvements like reinforcement learning from human feedback (RLHF), chain-of-thought (CoT), tools, and scaffolding, we can unlock significant latent capabilities.

This sort of stuff is extremely informative and eye-opening for the uninitiated (including me), and I love that his first essay is by far the longest and most substantive.

Part 2, From AGI to Superintelligence, rolls all of those trends forward and paints a picture of the kind of progress we should anticipate over the remainder of the decade, along with a discussion of potential bottlenecks and step-function unlocks. Aschenbrenner points to the rate of progress described in Part 1 and demonstrates just how weird the world gets if it continues for only a few more years. Put another way, if LLMs got 100,000x smarter over the last five years, how good will they get if we merely grant them another 1,000x improvement over the next five? How much “smarter” will they be than the current state of the art? Now how about 10,000x or 50,000x?
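To put rough numbers on that question, here is a small Python sketch of the compounding arithmetic. It takes the 100,000x and 1,000x figures at face value as plain multiplicative factors spread evenly over five years, which is a simplifying assumption, and `annual_multiplier` is a hypothetical helper name.

```python
def annual_multiplier(total_gain: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_gain over the given years."""
    return total_gain ** (1 / years)


# Figures quoted in the review, treated as plain multiplicative factors (assumption).
past_rate = annual_multiplier(100_000, 5)    # the last five years
modest_rate = annual_multiplier(1_000, 5)    # "merely" another 1,000x

print(f"Past five years:   about {past_rate:.1f}x per year")
print(f"Another 1,000x:    about {modest_rate:.1f}x per year")

# Combined gain over the full ten years if the more modest scenario plays out.
print(f"Ten-year total:    {100_000 * 1_000:,}x")
```

Under these assumptions, the "modest" 1,000x scenario still requires roughly a 4x improvement every year, compared with about 10x per year for the period already behind us.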

I have to say that this is pretty darn persuasive. Aschenbrenner has me fully convinced there's no scenario where the world isn't dramatically upended by AI by 2029, and that we're in for a weird and wild ride. If you just look at the technological factors and rate of progress, something that is AGI-like (or at least narrowly hyper-intelligent in enough domains to present as functionally AGI) seems inevitable.

Part 3 is where Aschenbrenner pivots away from laying out the "state of play" and into making concrete policy suggestions and predictions. This is where, IMO, the essay begins to depart from plausibility. A section I found particularly unpersuasive was IIIa, Racing to the Trillion-Dollar Cluster, where the author discusses the various things that "will" happen as a consequence of these exponential trendlines.

Here are a few things that Aschenbrenner predicts:

AI product revenue will surpass $100B/yr for individual companies like Google or Microsoft by 2026.

AI capital investment will total roughly $8T/yr by 2030.

Also in 2030, these data centers will be powered by an equivalent of 100% of 2024 U.S. electricity production.

Sorry, but this is Cathie Wood caliber forecasting. As anyone who watched the first Biden/Trump Presidential debacle (excuse me, I meant to type “debate”) can tell you, our institutions, political leadership, and ability to coordinate productively are currently so broken that the probability of infrastructure investment happening at anywhere near that magnitude is precisely zero. America is struggling to pull together the political will to build simple things like condos and railroads, we have runaway fiscal debt, and large segments of the populace seem to think that jailing Anthony Fauci and Hunter Biden is a higher priority than preparing for the singularity. We're not ready for this kind of ramp-up, not remotely.[1]

The author frequently assumes that just because some inputs to AI are growing exponentially (e.g. compute, model capabilities), most or all of the other inputs (e.g. size and cost of data centers, electricity production, chip manufacturing) are destined to follow the same path. This is waaaaaay too deterministic and almost completely ignores the chaotic human and political element that also has to go right to support all of this. What if there's a recession or runaway inflation or both? What about which party is in office? What if ROI on these investments plummets and Wall Street revolts? What if Iran launches a ground war against the Saudis? Not all exponentials are as much of a fait accompli as Moore's Law or LLM performance on the bar exam.

Aschenbrenner explains how he thinks about exponentials in the penultimate essay, The Project. He writes:

> A turn-of-events seared into my memory is late February to mid-March of 2020. In those last weeks of February and early days of March, I was in utter despair: it seemed clear that we were on the covid-exponential: a plague was about to sweep the country, the collapse of our hospitals was imminent—and yet almost nobody took it seriously. The Mayor of New York was still dismissing Covid-fears as racism and encouraging people to go to Broadway shows. All I could do was buy masks and short the market.
>
> And yet within just a few weeks, the entire country shut down and Congress had appropriated trillions of dollars (literally >10% of GDP). Seeing where the exponential might go ahead of time was too hard, but when the threat got close enough, existential enough, extraordinary forces were unleashed. The response was late, crude, blunt—but it came, and it was dramatic.
>
> The next few years in AI will feel similar.

Aschenbrenner is correct that the ability to reflect on the future impact of exponentials is what separated those who thought "Covid is just a cold happening over there in Asia" from those who anticipated the devastation that was coming soon for America. This was important foresight, and the more alarmist someone was at that time, the smarter decisions they made for their community and family.

But he forgets the limitations of being convinced by exponential models. If you were following the case and death counts in March/April of 2020, you probably noticed that if you just rolled the growth rates forward, Covid-19 could be expected to kill every single living American by approximately the middle of summer 2020.

That's obviously not what happened. What started off as an exponential growth curve eventually flattened into an S-curve as factors like herd immunity, public policy response, the continued mutation of the virus into less lethal strains, and vaccines came to matter more than the raw growth rate.
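The gap between rolling an exponential forward and what an S-curve actually does can be sketched in a few lines of Python. This is a toy illustration only: the starting count, growth rate, and ceiling below are hypothetical numbers chosen for readability, not Covid data, and a logistic curve is just one simple way to model growth that saturates.

```python
import math


def exponential(t: float, n0: float, r: float) -> float:
    """Naive extrapolation: growth at rate r continues forever."""
    return n0 * math.exp(r * t)


def logistic(t: float, n0: float, r: float, k: float) -> float:
    """S-curve with the same start and early growth rate, capped at ceiling k."""
    return k / (1 + ((k - n0) / n0) * math.exp(-r * t))


# Hypothetical parameters for illustration only.
N0 = 1_000        # starting count
R = 0.2           # growth rate per day
K = 1_000_000     # ceiling imposed by outside factors

for day in (0, 10, 30, 60, 90):
    print(f"day {day:>2}: exponential {exponential(day, N0, R):>16,.0f}"
          f"   logistic {logistic(day, N0, R, K):>10,.0f}")
```

The two curves are nearly indistinguishable in the first days, which is why early data alone cannot tell you which one you are on. The extrapolation then runs off past any plausible ceiling while the logistic curve levels out just below `K`.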

I love this analogy to Covid-19 infections because it provides a framework for thinking about how to analyze exponentials: Uninformed people under-rate exponentials, but highly informed people can become fixated on them and not anticipate exogenous reasons why the exponential might peter out. This answers the question of why places like OpenAI sometimes seem like apocalyptic death cults: If you're spending 8-12 hours a day working on something that is improving at a rate of approximately 10x per year, it's almost impossible to avoid imagining the end of the world in 5 or 10 years.

So we can say with some confidence that while the layperson drastically under-estimates the coming impact of AI, those closest to it (Aschenbrenner declares that he's one of only a few hundred people in the entire world who truly appreciate what's coming in AI[2]) are probably overconfident in their particular vision of the future.

If I had to bet on what ends up happening, I would guess that nearly everything that Aschenbrenner predicts in Parts 1 and 2 about short- and mid-term AI capabilities ends up being correct, and very little of the "real world impact" he discusses in Part 3 ends up happening at anywhere near the magnitude and timeframe he predicts. I believe that the commercialization of AI products will be complicated, with lots of fits and starts and dead ends. And I also believe that chip and data center production will be driven more by cultural, competitive, and foreign policy factors than pure demand.[3] As for the U.S. government, the DoD, and the possibility of political will ushering in another Manhattan Project… I'm not holding my breath.

Overall I endorse reading Situational Awareness for anyone interested in having their mind expanded around what's coming in an AI-rich future. My recommendation is to take Parts 1 and 2 with a pinch of salt, and for Part 3 you should consider gargling with the entire salt shaker.

[1] One could of course easily retort that this is actually Aschenbrenner's point: because our institutions are so weak and fragmented (particularly in the context of a supposedly looming threat from China), what we need is more manifestos like "Situational Awareness" to rouse us from our slumber and energize a New Manhattan Project for AI. And hey, no disagreement from me there. Keep going, Leopold!

[2] This is the footnote where I point out that Aschenbrenner, despite clearly being extremely bright and precocious, is only 22 years old. When I was 22 I was wrong about, well, basically everything. But then again I didn’t graduate as valedictorian of Columbia at age 19.

[3] It's worth remembering that NVIDIA's revenues and earnings didn't spike purely because worldwide chip demand went stratospheric. It was that, AND because these AI computing tasks are especially well-suited for GPUs (not CPUs), and NVIDIA is pretty much the only game in town right now for those. By contrast, Intel's and AMD's financial performance has gone nowhere over the past year. So you can't explain what's happening or predict the future without considering factors like market structure or the political environment.

To the point about Part 3 -- Very few people think appropriately about energy and energy economics, i.e., not what's possible via equations, but what's probable based on the reality of deployment by political, fickle, job-hopping, returns-seeking, vacation-taking humans. The image that comes to mind is of a Very Important Person with very important business rushing up to the DMV. No matter your hurry and imperative, everyone has to wait for processing, and that's as true for licenses as it is for transmission, pipelines, panels, mines and everything else in the value chain that generates the baseline energy units that get alchemized into intelligence.