Four hypotheses about generative AI

Every technology investment fund in the world has been having the same conversation over the past two months (minus a break for the SVB calamity): “How should we be investing in and around generative AI and large language models?”

I expect that most of us will be wrestling with that question for the remainder of 2023 (and perhaps for the rest of our careers). Below I have sketched out a few working theses that I have been mulling over. I would love to hear what you all think, and whether you agree or disagree.

(1) There will be a lot of value in playing defense against generative AI.

Most speculation about LLMs has focused on playing offense: building products with them, incorporating them into workflows, layering applications on top of GPT-4, etc.

But think about what exactly these technologies create: massive quantities of cheap, human-seeming text and media. Imagine an absolute onslaught of this stuff, coming at you from every channel imaginable, and growing ever more clever at tricking you, capturing your attention, and bypassing your defenses.

Enterprises will need to defend themselves against this onslaught, both from a security perspective and from an organizational/sanity perspective. That makes me think three categories will really prosper: cybersecurity, work management (Asana, Monday, Trello, Airtable, etc.), and data governance.

(2) Companies should start by using LLMs in internal software development processes before they work on incorporating them into customer-facing products.

I have no doubt that entrepreneurs are going to create fascinating and powerful new products that use AI to deliver better results for customers and end users. However, this will take lots of experimentation. Recall that the iPhone App Store opened in mid-2008, and it wasn’t until 2010 and onwards that killer apps began to surface.

In the meantime, while people are figuring out those killer apps, what seems completely unambiguous is how transformational LLMs will be for the craft of software development. I mean, just look at these examples:

Utterly mind-blowing. This is a “get on board or get left in the dust” moment. Asking someone to develop software without AI support will be like asking someone to close the books without giving them a computer.

(3) I’m not sure the LLM builders themselves have a moat.

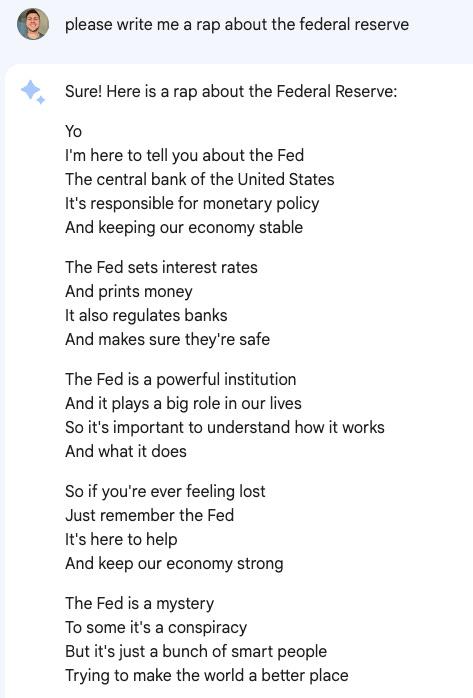

ChatGPT exploded onto the scene and blew everyone’s minds. Shortly thereafter, Google released Bard, which obviously sucks by comparison. Just have a look at how much better ChatGPT is at rapping:

Not even close. ChatGPT 1, Bard 0.

But don’t forget that OpenAI had a huge head start here, mostly because Google invented this technology and then sat on their butts for six years while other firms got it into the public’s hands. Just as GPT-4 is insanely better than GPT-3, which was vastly better than GPT-2, the next few versions of Google’s Bard should improve by leaps and bounds.

Eventually all of the major LLMs will have scaled up to however many trillions of parameters are necessary for them all to be equally very, very, very, very good. So will OpenAI really retain any first-mover advantage? What if any entity (corporation, nation-state, whatever) that invests $5 billion or more to create a sufficiently powerful LLM can more or less have this technology? Will the underlying models themselves become an oligopoly, or a pure commodity?

And did you know that you can now run a halfway-decent open-source LLM on a Raspberry Pi?

Unless one of these models blasts so far into the future that it jumps from LLM to AGI to the singularity, it appears that the model builders will have an oligopoly at best and a commodity business at worst.

(4) What happens to APIs?

Managing APIs and third-party integrations has been the bane of many a tech company’s existence. I can think of several companies that had to spend more R&D effort maintaining integrations than launching new products.

But one of the most astounding things LLMs can do is write code that solves the same interoperability problems APIs solve, working from nothing more than a natural-language instruction. For example: “Take the patient’s vitals data from their device and import it into the electronic health records system.” Building and managing APIs to accomplish tasks like this used to take months; now LLMs may be able to figure it out on their own.

I’m not saying it’s as easy as merely asking the system to do it and letting it do its work. But… maybe it’s a lot closer to that than we think?